#data annotator

Explore tagged Tumblr posts

Text

Project Description: Bounding Box Bee Annotation on Supervisely

I recently completed a project for a client, creating bounding boxes for bees using the Supervisely platform. This task required precise and accurate annotations to enhance machine learning models for insect detection.

Key Features:

- Platform Used: Supervisely
- Task: Creating bounding boxes around bees in images
- Objective: Accurate labeling for machine learning models

Why Hire Me?

- Expertise in Image Annotation
- Proficiency with Supervisely
- Commitment to Quality and Accuracy

For reliable and skilled data annotation services, visit my Fiverr page: https://www.fiverr.com/s/7YXxG94

#image annotation services#ai data annotator#artificial intelligence#ai image#annotation#annotations#machinelearning#ai data annotator jobs#data annotator#ai

0 notes

Text

DELTA IS EMPLOYED. DELTA HAS A JOB! DELTA STARTS ON JUNE 3. DELTA IS SO EXCITED TO FINALLY NOT BE STRUGGLING FOR DOLLARS THAT SHE MIGHT EXPLODE

#windchime song#delta EMPLOYAGON#its a work from home job too!!!#100% remote doing data annotation#incredibly boring but its WORK AND IT MEANS MONEY

29 notes

Text

Fascinated by how skinny Nusu's legs are. What if we'd gotten to see the rest of Kusu's, would they also be that skinny? Is Nusu smaller than the average Medicine Seller, or are they all just tiny little wisps under all those clothes? We've seen more than we've ever seen before and yet all I have is more questions.

#mononoke#karakasa#kusuriuri#I need to see official art of them side by side for comparison#preferably annotated with their precise measurements#I need data

40 notes

Text

maybe it was a mistake to move bc if i had stayed in MA i could have continued tapping into a local network and would probably be working at umass right now :/ also it would have been so much easier to get on food stamps and medicaid. bc i am not eligible for either of those things in tx. and i wasn't even eligible for tx unemployment i had to go through ma unemployment. do the texas republican voters know they don't have to live like this and that things could be better and they could have an actual social safety net

#i have applied for every possible city and county job but they are moving So So So slow#december is a traditionally awful mental health time and im trying really hard to line up SOMETHING ANYTHING before my mental health absolutely flatlines next week due to a bad anniversary#i can't even get a job annotating AI data or factchecking AI data

9 notes

Text

my back really hates enthusiasm. woke up not eager but ready to get started on multiple things at once and it really said 'oh? you want to be able to move without pain? fuck you'

But fuck my back say I, bc I can work on the grocery pick up while laying down. Check mate, you... spine.

(turns out nothing i can think of to say/type to shame my spine sounds effective. it all just sounds vaguely clinical lmao)

#text post#brain woke up in a weird place too and im trying to push past that#it's not that i have A Lot to do today it's that the timing needs to be mindful#if i start laundry too late then that fucks up lunch#in between that i need to get grocery pick up finished and figured out and start on prolific and cloud#then to check on data annotation stuff#i should at least take some pictures to post for the side gig#and i need to finish up the holiday cards and gift boxes for my family bc those need to be shipped out asap#...maybe my back is trying to give me a gift lmao. i can attempt productivity so long as I'm also laying flat on my back#...my laptop can be brought down to sit in my actual lap so really the survey sites/grocery/pictures stuff could be done here#and the cards are closeish by#so then it's just laundry and gift boxes to do while physical moving#FUCK AND DISHES. i almost fuckin forgor them. the dish#...im gonna wind up doing laundry tomorrow aren't i. im not actually getting that done today and i should probably accept that now#not gonna tho! gonna be mad abt it later but i really should have started laundry#before i let myself lay down and that's on me

6 notes

Text

okay alright okay I can get through my workload tonight I absolutely can do it I am going to get through all of these assignments and it will be fine!

#I have: two discussion posts. an annotation assignment. notes to make for a group project. data analysis. and some additional research.#tomorrow I've gotta do a reference interview and then do some more research.#and then I have some presentation slides and a draft final.#okay I got this it's cool it's fine!#megs vs mlis

52 notes

Text

that post where it’s like “jobs these days are only sandwich maker or job at death factory” and for english majors the job at death factory is data annotation

#data annotation needs to stop showing up on my linkedin lmfao i KNOW you pay $27 CAD per hour#I KNOW YOU ARE TEMPTING BUT YOU ARE LITERALLY THE DEATH FACTORY MAKING THE WORLD WORSE#anyway im gonna make a banana bread matcha now ..

5 notes

Text

ANNOTATE DATA TO INCREASE USEFULNESS

ANNOTATE AND LINK DOCUMENTS AND FILES

11 notes

Text

The Rise of Data Annotation Services in Machine Learning Projects

4 notes

Text

My SD card isn't being read by my phone I wanna punch something

9 notes

Text

Pollution Annotation / Pollution Detection

Pollution annotation involves labeling environmental data to identify and classify pollutants. This includes marking specific areas in images or videos and categorizing pollutant types.

### Key Aspects:

- **Image/Video Labeling:** Using bounding boxes, polygons, keypoints, and semantic segmentation.
- **Data Tagging:** Adding metadata about pollutants.
- **Quality Control:** Ensuring annotation accuracy and consistency.

### Applications:

- Environmental monitoring
- Research
- Training machine learning models

Pollution annotation is crucial for effective pollution detection, monitoring, and mitigation strategies.

Fiverr Link: https://lnkd.in/gM2bHqWX
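One common way to check annotation consistency for bounding boxes is to compare two annotators' boxes for the same object using intersection over union (IoU). The sketch below assumes boxes are stored as `(x_min, y_min, width, height)` in pixels and uses a hypothetical agreement threshold of 0.5; it is an illustration of IoU-based review, not the workflow of any particular platform.

```python
from typing import List, Sequence, Tuple

# Assumed box convention: (x_min, y_min, width, height) in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union (IoU) of two axis-aligned boxes, in [0, 1]."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    # Width/height of the overlap rectangle, clamped to 0 when the boxes are apart.
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def flag_disagreements(
    boxes_a: Sequence[Box], boxes_b: Sequence[Box], threshold: float = 0.5
) -> List[int]:
    """Indices where two annotators' paired boxes overlap less than `threshold`.

    Assumes boxes_a[i] and boxes_b[i] were drawn around the same object;
    the 0.5 default is a hypothetical review threshold.
    """
    return [i for i, (a, b) in enumerate(zip(boxes_a, boxes_b)) if iou(a, b) < threshold]
```

For example, `flag_disagreements([(10, 10, 40, 40), (100, 100, 50, 50)], [(12, 12, 40, 40), (130, 130, 50, 50)])` returns `[1]`: the first pair overlaps heavily (IoU about 0.82), while the second pair overlaps only slightly (IoU about 0.09) and would be sent back for review.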

#image annotation services#artificial intelligence#annotation#machinelearning#annotations#ai data annotator#ai image#ai#ai data annotator jobs#data annotator#video annotation#image labeling

0 notes

Text

quick yaoi break (reading week) almost over time to do all the schoolwork ive been ignoring

5 notes

Text

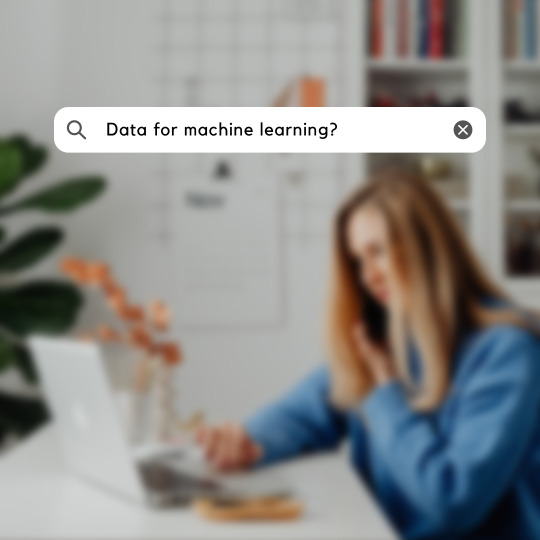

Our IT Services

With more than 7 years of experience in the data annotation industry, LTS Global Digital Services has been honored to receive major domestic awards and to earn the trust of significant customers in the US, Germany, Korea, and Japan. Having completed hundreds of projects in fields such as Automobile, Retail, Manufacturing, Construction, and Sports, our company delivers projects with accuracy of up to 99.9%, a figure confirmed by 97% of customers using the service.

If you are looking for an outsourcing company that meets these criteria, contact LTS Global Digital Services for a consultation and a trial!

2 notes

Text

Now Hiring!!! This Ireland-based tech company is hiring international workers for the position of Map Evaluator. Work from home, part-time or full-time. Experience is not necessary. No phone/online interview. Click or copy the link to apply.

https://bit.ly/3IzrLeD

3 notes

Text

If anyone wants to work from home grading AI model responses for about $20/hr, dm me and I can send you my referral code. I’ve done just over 5 hours of work and have made about $110 so far

#the company is data annotations#it’s been amazing so far tbh bc I’m otherwise unemployed and need money#I do get $10 for referring people but only after they make at least $100 doing work#it’s definitely not for everyone but if you enjoy researching things and writing it’s actually pretty fun!#and if you know coding some of the projects pay up to $40/hr#while it’s def legit it’s not really something to live off unless your bills are incredibly low or you’re REALLY committed

2 notes

Text

What is a Data pipeline for Machine Learning?

As machine learning technologies continue to advance, the need for high-quality data has become increasingly important. Data is the lifeblood of computer vision applications, as it provides the foundation for machine learning algorithms to learn and recognize patterns within images or video. Without high-quality data, computer vision models will not be able to effectively identify objects, recognize faces, or accurately track movements.

Machine learning algorithms require large amounts of data to learn and identify patterns, and this is especially true for computer vision, which deals with visual data. By providing annotated data that identifies objects within images and provides context around them, machine learning algorithms can more accurately detect and identify similar objects within new images.

Data is also essential for validating computer vision models. Once a model has been trained, it is important to test its accuracy and performance on new data. This requires additional labeled data to evaluate the model's performance. Without this validation data, it is impossible to accurately determine the effectiveness of the model.

Data Requirements at Multiple ML Stages

Data is required at various stages in the development of computer vision systems.

Here are some key stages where data is required:

Training: In the training phase, a large amount of labeled data is required to teach the machine learning algorithm to recognize patterns and make accurate predictions. The labeled data is used to train the algorithm to identify objects, faces, gestures, and other features in images or videos.

Validation: Once the algorithm has been trained, it is essential to validate its performance on a separate set of labeled data. This helps to ensure that the algorithm has learned the appropriate features and can generalize well to new data.

Testing: Testing is typically done on real-world data to assess the performance of the model in the field. This helps to identify any limitations or areas for improvement in the model and the data it was trained on.

Re-training: After testing, the model may need to be re-trained with additional data or re-labeled data to address any issues or limitations discovered in the testing phase.

In addition to these key stages, data is also required for ongoing model maintenance and improvement. As new data becomes available, it can be used to refine and improve the performance of the model over time.

Types of Data used in ML model preparation

The team has to work on various types of data at each stage of model development.

Streaming, structured, and unstructured data are all important when creating computer vision models, as each can provide valuable information for training the model.

Streaming data refers to data that arrives continuously, in real time or near real time, from sources such as sensors, cameras, or other devices monitoring a particular environment or process.

Structured data, on the other hand, refers to data that is organized in a specific format, such as a database or spreadsheet. This type of data can be easier to work with and analyze, as it is already formatted in a way that can be easily understood by the computer.

Unstructured data includes any type of data that is not organized in a specific way, such as text, images, or video. This type of data can be more difficult to work with, but it can also provide valuable insights that may not be captured by structured data alone.

When creating a computer vision model, it is important to consider all three types of data in order to get a complete picture of the environment or process being analyzed. This can involve using a combination of sensors and cameras to capture streaming data, organizing structured data in a database or spreadsheet, and using machine learning algorithms to analyze unstructured data such as images or text. By leveraging all three types of data, it is possible to create a more robust and accurate computer vision model.

Data Pipeline for machine learning

The data pipeline for machine learning involves a series of steps, starting from collecting raw data to deploying the final model. Each step is critical in ensuring the model is trained on high-quality data and performs well on new inputs in the real world.

Below is the description of the steps involved in a typical data pipeline for machine learning and computer vision:

Data Collection: The first step is to collect raw data in the form of images or videos. This can be done through various sources such as publicly available datasets, web scraping, or data acquisition from hardware devices.

Data Cleaning: The collected data often contains noise, missing values, or inconsistencies that can negatively affect the performance of the model. Hence, data cleaning is performed to remove any such issues and ensure the data is ready for annotation.
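A minimal sketch of two basic cleaning checks, assuming images are held in memory as a mapping from file name to raw bytes (with `None` for files that failed to load): drop missing or empty files, and drop exact duplicates by hashing file contents with SHA-256. The function name `clean_images` is hypothetical.

```python
import hashlib
from typing import Dict, List, Optional


def clean_images(records: Dict[str, Optional[bytes]]) -> List[str]:
    """Return the file names to keep after two simple cleaning checks.

    `records` maps a file name to its raw bytes, or None if it could not be read.
    Illustrative only: real cleaning also covers corrupt files, bad metadata, etc.
    """
    seen_hashes = set()
    keep = []
    for name in sorted(records):  # sorted so the result is deterministic
        data = records[name]
        if not data:  # missing (None) or zero-byte file
            continue
        digest = hashlib.sha256(data).hexdigest()
        if digest in seen_hashes:  # byte-identical copy of a file already kept
            continue
        seen_hashes.add(digest)
        keep.append(name)
    return keep
```

Content hashing only catches byte-identical copies; resized or re-encoded near-duplicates need a perceptual comparison instead.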

Data Annotation: In this step, trained annotators label the images, providing the ground truth the model learns from. Data annotation can be in the form of bounding boxes, polygons, or pixel-level segmentation masks.
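Bounding-box labels are usually exported in a fixed coordinate convention, and converting between conventions is a routine step. The sketch below converts a COCO-style box `[x_min, y_min, width, height]` in pixels into the YOLO-style `(x_center, y_center, width, height)` normalized to `[0, 1]`. Which format a given project uses depends on its tools; the choice here is an assumption for illustration.

```python
from typing import Tuple


def coco_to_yolo(
    bbox: Tuple[float, float, float, float], img_w: int, img_h: int
) -> Tuple[float, float, float, float]:
    """Convert [x_min, y_min, width, height] (pixels) to normalized
    (x_center, y_center, width, height)."""
    x_min, y_min, w, h = bbox
    # Basic sanity checks an annotation review might also enforce.
    if w <= 0 or h <= 0:
        raise ValueError("box must have positive width and height")
    if x_min < 0 or y_min < 0 or x_min + w > img_w or y_min + h > img_h:
        raise ValueError("box extends outside the image")
    return (
        (x_min + w / 2) / img_w,  # box center, as a fraction of image width
        (y_min + h / 2) / img_h,  # box center, as a fraction of image height
        w / img_w,
        h / img_h,
    )
```

For a bee boxed at `[100, 120, 64, 48]` in a 640x480 image, this gives `(0.20625, 0.3, 0.1, 0.1)`.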

Data Augmentation: To increase the diversity of the data and prevent overfitting, data augmentation techniques are applied to the annotated data. These techniques include random cropping, flipping, rotation, and color jittering.
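Geometric augmentations have to move the labels along with the pixels. As a minimal sketch, assuming a grayscale image stored as a list of pixel rows and boxes as `(x_min, y_min, width, height)`, a horizontal flip mirrors each row and maps a box's left edge to `image_width - (x_min + width)`.

```python
import random
from typing import List, Tuple

Image = List[List[int]]  # rows of grayscale pixel values (simplified representation)
Box = Tuple[float, float, float, float]  # (x_min, y_min, width, height) in pixels


def hflip(image: Image, boxes: List[Box]) -> Tuple[Image, List[Box]]:
    """Mirror an image left-to-right and move its boxes to match."""
    width = len(image[0])
    flipped = [row[::-1] for row in image]
    # A box's right edge (x_min + w) becomes its new left edge after mirroring.
    new_boxes = [(width - x - w, y, w, h) for (x, y, w, h) in boxes]
    return flipped, new_boxes


def random_hflip(image: Image, boxes: List[Box], p: float = 0.5, rng=random):
    """Apply hflip with probability p; p=0.5 is a common (assumed) default."""
    if rng.random() < p:
        return hflip(image, boxes)
    return image, boxes
```

Augmentation like this is normally applied only to the training split, so that validation and test scores reflect unmodified data.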

Data Splitting: The annotated data is split into training, validation, and testing sets. The training set is used to train the model, the validation set is used to tune the hyperparameters and prevent overfitting, and the testing set is used to evaluate the final performance of the model.
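A minimal sketch of a reproducible split, assuming a 70/15/15 ratio (an illustrative choice, not a rule): shuffle once with a fixed seed, then slice into training, validation, and test sets.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_dataset(
    items: Sequence[T], train: float = 0.7, val: float = 0.15, seed: int = 42
) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle with a fixed seed, then cut into train / validation / test.

    The test share is whatever remains after train and val (0.15 by default).
    """
    if not (0 < train and 0 <= val and train + val < 1):
        raise ValueError("need 0 < train, 0 <= val, and train + val < 1")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # local RNG: reproducible, no global state
    n_train = int(len(shuffled) * train)
    n_val = int(len(shuffled) * val)
    return (
        shuffled[:n_train],
        shuffled[n_train:n_train + n_val],
        shuffled[n_train + n_val:],
    )
```

With 100 items this yields 70/15/15 items. When images are near-duplicates (for example, consecutive frames from one video), splitting by source video rather than by image helps keep the test set independent of the training set.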

Model Training: The next step is to train the computer vision model using the annotated and augmented data. This involves selecting an appropriate architecture, loss function, and optimization algorithm, and tuning the hyperparameters to achieve the best performance.
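Real computer vision models are neural networks trained with a framework, but the same ingredients (a model, a loss function, an optimizer, and a hyperparameter chosen on the validation set) can be shown at toy scale. The sketch below swaps in logistic regression trained by full-batch gradient descent on log loss, and picks the learning rate with the best validation accuracy. The candidate learning rates and epoch count are assumptions.

```python
import math
from typing import List, Sequence, Tuple

Example = Tuple[Sequence[float], int]  # (feature vector, label in {0, 1})
Model = Tuple[List[float], float]  # (weights, bias)


def sigmoid(z: float) -> float:
    # Numerically stable logistic function (avoids overflow in math.exp).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(model: Model, x: Sequence[float]) -> float:
    w, b = model
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def train_logreg(data: List[Example], lr: float, epochs: int = 200) -> Model:
    """Fit weights and bias by full-batch gradient descent on log loss."""
    n_features = len(data[0][0])
    w, b = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for x, y in data:
            err = predict_proba((w, b), x) - y  # derivative of log loss w.r.t. the logit
            for j, xj in enumerate(x):
                grad_w[j] += err * xj
            grad_b += err
        w = [wi - lr * gw / len(data) for wi, gw in zip(w, grad_w)]
        b -= lr * grad_b / len(data)
    return w, b


def accuracy(model: Model, data: List[Example]) -> float:
    correct = sum((predict_proba(model, x) >= 0.5) == bool(y) for x, y in data)
    return correct / len(data)


def pick_learning_rate(train, val, candidates=(0.01, 0.1, 1.0)):
    """Train one model per learning rate; keep the best on validation data."""
    best = None
    for lr in candidates:
        model = train_logreg(train, lr)
        score = accuracy(model, val)
        if best is None or score > best[0]:
            best = (score, lr, model)
    return best  # (validation accuracy, learning rate, model)
```

The test set is deliberately left out of this loop; it is reserved for the final evaluation step.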

Model Evaluation: Once the model is trained, it is evaluated on the testing set to measure its performance. Metrics such as accuracy, precision, recall, and F1 score are computed to assess the model's performance.
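The metrics named above follow directly from counts of true positives (TP), false positives (FP), and false negatives (FN): precision = TP / (TP + FP), recall = TP / (TP + FN), and F1 is their harmonic mean. A minimal sketch for binary labels, assuming `1` marks the positive class:

```python
from typing import Dict, Sequence


def classification_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy, precision, recall and F1 for binary labels (1 = positive)."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    correct = sum(t == p for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # Harmonic mean of precision and recall; defined as 0 when both are 0.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": correct / len(pairs), "precision": precision, "recall": recall, "f1": f1}
```

For object detection, a predicted box is commonly counted as a true positive only when its IoU with a ground-truth box exceeds a threshold such as 0.5, after which the same formulas apply.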

Model Deployment: The final step is to deploy the model in the production environment, where it can be used to solve real-world computer vision problems. This involves integrating the model into the target system and ensuring it can handle new inputs and operate in real time.

TagX Data as a Service

Data as a service (DaaS) refers to the provision of data by a company to other companies. TagX provides DaaS to AI companies by collecting, preparing, and annotating data that can be used to train and test AI models.

Here’s a more detailed explanation of how TagX provides DaaS to AI companies:

Data Collection: TagX collects a wide range of data from various sources such as public data sets, proprietary data, and third-party providers. This data includes image, video, text, and audio data that can be used to train AI models for various use cases.

Data Preparation: Once the data is collected, TagX prepares the data for use in AI models by cleaning, normalizing, and formatting the data. This ensures that the data is in a format that can be easily used by AI models.
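As one generic example of what "normalizing" can mean (not a description of TagX's actual process), the sketch below scales 8-bit pixel values into `[0, 1]` and applies z-score standardization to a numeric feature. The mean and standard deviation are fit on training values only and then reused for other splits, so no information from the test data leaks into preparation. Function names are hypothetical.

```python
import statistics
from typing import List, Sequence, Tuple


def scale_pixels(pixels: Sequence[int]) -> List[float]:
    """Map 8-bit pixel values (0..255) into [0, 1]."""
    return [p / 255 for p in pixels]


def fit_standardizer(train_values: Sequence[float]) -> Tuple[float, float]:
    """Compute mean and population standard deviation on training data only."""
    return statistics.fmean(train_values), statistics.pstdev(train_values)


def apply_standardizer(values: Sequence[float], params: Tuple[float, float]) -> List[float]:
    """Z-score: (x - mean) / std, using parameters fit on the training split."""
    mean, std = params
    if std == 0:
        return [0.0] * len(values)  # a constant feature carries no signal
    return [(v - mean) / std for v in values]
```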

Data Annotation: TagX uses a team of annotators to label and tag the data, identifying specific attributes and features that will be used by the AI models. This includes image annotation, video annotation, text annotation, and audio annotation. This step is crucial for the training of AI models, as the models learn from the labeled data.

Data Governance: TagX ensures that the data is properly managed and governed, including data privacy and security. We follow data governance best practices and regulations to ensure that the data provided is trustworthy and compliant.

Data Monitoring: TagX continuously monitors the data and updates it as needed to ensure that it is relevant and up-to-date. This helps to ensure that the AI models trained using our data are accurate and reliable.
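One simple signal for deciding when a dataset needs updating is a shift in label frequencies between the data a model was trained on and recently collected data. The sketch below measures that shift with total variation distance (half the L1 distance between two distributions). The 0.2 threshold and the function names are hypothetical, and this is not a description of TagX's monitoring.

```python
from collections import Counter
from typing import Dict, Hashable, Iterable


def label_distribution(labels: Iterable[Hashable]) -> Dict[Hashable, float]:
    """Relative frequency of each label."""
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no labels given")
    return {k: v / total for k, v in counts.items()}


def total_variation(p: Dict[Hashable, float], q: Dict[Hashable, float]) -> float:
    """Half the L1 distance: 0 for identical distributions, 1 for disjoint ones."""
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)


def needs_refresh(train_labels, recent_labels, threshold: float = 0.2) -> bool:
    """Flag the dataset for review when label frequencies drift past `threshold`."""
    drift = total_variation(label_distribution(train_labels), label_distribution(recent_labels))
    return drift > threshold
```

For example, if training data was 80% "bee" and 20% "wasp" but recent data is split 50/50, the distance is 0.3 and the check flags the dataset for review.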

By providing data as a service, TagX makes it easy for AI companies to access high-quality, relevant data that can be used to train and test AI models. This helps AI companies to improve the speed, quality, and reliability of their models, and reduce the time and cost of developing AI systems. Additionally, by providing data that is properly annotated and managed, the AI models developed can be exp

2 notes
